Sorry I've been gone for quite some time, but I've been out fishing in the very different waters of PHP and MySQL.

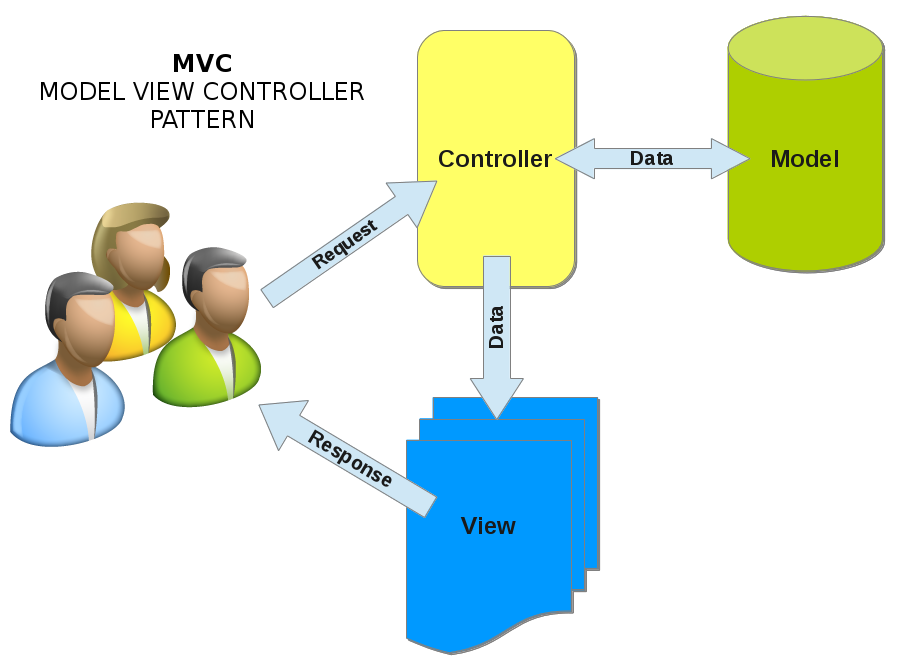

So one of the things I had fun doing was working with MVC design patterns and thought it would be fun to make

a very simple, extensible and easy to understand MVC for Perl. You can go out and find many writings and frameworks on this

subject but my intent here is to make this more of a day excursion as opposed to a life long journey. I also would like to start

working from the ground up, using just core Perl and its standard modules if possible. Hopefully after all this I will end up with an easy to use Perl

based framework that is easy to understand and extend as I need. read more...

Sunday, December 15, 2013

Friday, July 13, 2012

Is this speed boat or a row boat?

When I first started programming in PERL, you could say I was the subject of all types of qualified and unqualified code revues. This lead to me finding out that in PERL there is more than one way to do it, TIMTOWTDI if you prefer the acronym. So, how do you explain to a 20 year old veteran that just because he's been doing the code that way for years and it works, that its not necessarily true for this application? For that fact how do I know my own code is the correct direction to take?

There is no need to "Go Fish", PERL has a solution using one of its core modules Benchmark. This is a simple tool to evaluate PERL code snippets and see how fast they are running. A good place to start with is to single quote or to double quote, which is an argument that I have heard for many years, says to always use string literals over interpolated strings because interpolation requires extra processing. So to get this boat race started, I have put together a small script to see if we can use the Benchmark module to prove this or not.

# test.pl

#!/usr/bin/perl

use Benchmark qw(:all) ;

cmpthese(10000000, {

'literal' => sub{my $a = 'abcde'},

'interpolated' => sub{my $a = "abcde"},

});

We will use the cmpthese function to compare the two code snippets and see which one runs faster. As you can see, the cmpthese method uses two parameters, a large integer which is number of loops to run the code and a reference to a hash of code to be evaluated. Each key in the hash is a label for a referenced piece of code that will be compared.

[coder@perlbox ~]$ ./test.pl

Rate literal interpolated

literal 3984064/s -- -2%

interpolated 4065041/s 2% --

As you can see the results are not as expected, interpolated won, this is because not all systems were created or are running equally. As I referenced before, that older code works better is not necessarily true for all applications, means that everything the server is doing can impact performance measurements. But after running this test several times you will find out that literals do perform a little better in most cases.

The Benchmark module can also be used to establish the high water mark to gauge whether performance modifications to your code make sense, with this being said I hope it make my job of fishing for faster code easier.

Sunday, January 24, 2010

Recursion makes my brain hurt...

"So the other day I was at the local bar, working with recursion and..." I know we've all heard this joke before and it always ends in new and more imaginative ways to reduce a system to a quivering pile of uselessness. This got me wondering if there might be some standard nuts and bolts standards to avoid the pitfalls of writing recursive code. Most of the problems that I have encountered either stem from not giving my perl code a set of brakes or not understanding the data enough to know how my code going to react. So I have managed to waste more than a few hours and caused myself just as many headaches when it comes to recursion.

I remember Blake Williford, a colleague of mine, saying "...with recursive functions you should always process the degenerate case first...", this makes sense and might be what I'm looking for. The two pitfalls (degenerate cases I think) that come to mind is "run away code" and "circular references in the data". Both of these can end up with a "the never ending boat ride" or a "quivering pile of uselessness". So what do I mean by "run away code"? This is code that has no brakes:

# My run away boat

sub recurse{

my $num = shift;

$num++;

print $num."\n";

recurse($num);

}

In this case, the code will continue to add 1 to itself till it sails off the edge of the world. This is generally the point where I will hit the largest integer the system can handle or the recursion times out. So as my perl code sails over the edge and dies, we should be able see the perl interpreter complaining about large integers and/or deep recursion. As a cautionary note, if you were to couple the above code with a fork(), system(), and/or exec() like operations, the code would run till it consumes some critical system resource. At which time your system will suddenly burst into flames and crash into a quivering pile(well maybe not the flames part). At any case I need to throw the anchor out and stop my code before it gets to far away:

# my degenerate function

sub recurse{

my $num = shift;

# set how our limit

my $biggest_num = 999999999999;

# throw out the anchor if over ther limit

# AKA test the degenerate case

return if($num > $biggest_num);

$num++;

print $num."\n";

recurse($num);

}

I can see two advantages with this approach, first only the least amount code is executed before returning. Secondly my code won't be sailing for the edge, it now knows where to drop anchor. But I do wonder if this will be so obvious when you start adding data and more logic to my perl code. So lets make things a little more interesting and try to process a bit more realistic data:

my @family_tree = (

{

'name' => 'warren',

'relation' => 'self',

'parents' => 'bob,barbara'

},

{

'name' => 'bob',

'relation' => 'father',

'parents' => 'robert,barb'

},

{

'name' => 'robert',

'relation' => 'grandfather',

'parents' => 'gert,william'

},

{

'name' => 'barb',

'relation' => 'grandmother',

'parents' => 'julie,sam'

},

{

'name' => 'barbara',

'relation' => 'mother',

'parents' => 'warren,marry'

},

{

# my namesake

'name' => 'warren',

'relation' => 'grandfather',

'parents' => 'jack,jill'

},

{

'name' => 'marry',

'relation' => 'grandmother',

'parents' => 'oliver,dorothy'

},

{

'name' => 'john',

'relation' => 'son',

'parents' => 'warren,samatha'

}

);

Next I would like to watch how a recursive function might explore my family tree. Since we have a small data set my code can't go to far before it runs out of things to process so I'm only validating the data for my degenerate cases . But if the data set was much larger I would need to puts some limits on it(bring the boat anchor).

# get my all of sons hash references and feed it to the tree walker

walk_tree(grep($_->{'relation'} eq 'son',@family_tree));

sub walk_tree{

my $person = shift;

# return not a valid person

return unless(defined $person->{'name'}

&& defined $person->{'relation'});

# tell the world who I am

print "\n";

print 'Hello my name is '.$person->{'name'};

print ' and I\'m Warren\'s '.$person->{'relation'}."\n";

# process the parents or return there is none

if(defined $person->{'parents'}){

my @parent_names = ();

@parent_names = split /,/, $person->{'parents'};

print 'My parents are: '.join(" and ",@parent_names)."\n";

foreach my $parent_name(@parent_names){

print 'I\'m checking '.$parent_name."'s lineage\n";

walk_tree(grep($_->{'name'} eq $parent_name,@family_tree));

}

}

}

If you actually run the code, you will see this is actually an example of my second case "circular references in the data", and as you will also see has determined that I'm my own grandfather. I have also created a logical and maybe even a temporal paradox that my perl script is unable to resolve without manual intervention(^C). But despite my cataclysmic coding error there's still some good news, this code is only juggling the same pointers around and not creating new ones, so all I have is run away code that is going around the world to the left(maybe right). So I need to figure some other degenerate case and so to speak throw a bucket of water on this type of behaviour.

# get my all of sons hash references and feed it to the tree walker

walk_tree(grep($_->{'relation'} eq 'son',@family_tree));

sub walk_tree{

my $person = shift;

my $state = shift;

# return not a valid person

return unless(defined $person->{'name'}

&& defined $person->{'relation'});

# tokenize some data that makes me unique

# and store it's state

push(@{$state},$person->{'name'}.$person->{'relation'});

# tell the world who I am

print "\n";

print 'Hello my name is '.$person->{'name'};

print ' and I\'m Warren\'s '.$person->{'relation'}."\n";

# process the parents or return there is none

if(defined $person->{'parents'}){

my @parent_names = ();

@parent_names = split /,/, $person->{'parents'};

print 'My parents are: '.join(" and ",@parent_names)."\n";

foreach my $parent_name(@parent_names){

# get list of all people with same name as parent.

my @people =();

push @people, grep($_->{'name'} eq $parent_name,@family_tree);

foreach my $me(@people){

# see if we've already checked this person

my $used = scalar

grep($me->{'name'}.$me->{'relation'} eq $_,@{$state});

unless($used){

print 'I\'m checking '.$me->{'name'}."'s lineage\n";

walk_tree($me,$state);

}

}

}

}

}

We can now track the state of who's already been looked at and make sure we don't recurse on them again. Now that my script works as expected it is far from over, consider we can't always guarantee our data is clean or in the right order. This leave the possibility of more degenerate cases but I'm not going to entertain them now, because as mentioned this stuff makes my brain hurt. However for though that wish to go swimming these waters, please comment and show me some examples of what your thinking of, for as of right now I've only entertained the one standard.

And for all you more experienced coders that are wondering why such a rant over this trivial aspect of coding? I'll just say I've seen a few fledgling system administrators render some very large production systems useless (myself included) with this exact weapon. This post I hope can also be a cautionary old fishermen's yarn for all those newbie perl developers and system administrators out there that might be playing with fire. Hopefully my ranting on recursion will be useful for anyone who is thinking about jumping into the deep end of the recursion pool.

Sunday, January 17, 2010

You shouldn't swim in TAINTed waters.

Most people know it's not safe to swim in shark infested waters, so why would you do the same thing with your perl code? As we all know there's all types of well meaning and not so well meaning sharks trolling out there in the waters of the world wide web. Add to this, some sources say, that numbers as high 80% of security breaches and hacks are internal in origin, some may begin to think its just best to stay out of the water. There is good news though, for when it comes to perl, you can just ask it to help with plugging up some of its own holes by using perl's taint feature.

So how do you use this really cool feature in perl? Its as simple as adding "-T" switch to your shebang statement in your code or to the interpreter if your running from the command line.

So your asking yourself, what does this person mean by "Tainted water"? Perl basically looks at certain aspects of your code, first do you use any functions that can compromise the system, next is any external data being used by those functions and also if the environment will allow arbitrary scripts to be executed by your code. So if any of the above condition exists in your code, then your data is considered tainted and perl will exit, complaining vigorously until you fix it. But if you would like a more precise definition of tainting, a good fishing spot is here, Perl Docs - perlsec. But for now lets consider this code snippet:

So now we know there is a hole in our code big enough for any shark to swim through, what do we do next? Like any sailor knows you grab the biggest bucket you can and start baling out the water. What I mean by this is we need to capture the data and look at it before we use it and the taint feature lets us use sub-expressions from perl's regular expression features to do this.

We now have a bucket, so how do we start bailing out the water? We don't, we shove the bucket in the hole to plug it up. At this point in time we have an expression that matches anything ( /(.*)/ ) and need to be more specific about looking at our data and start validating what's in it. Lets take a look at the new and improved real_cool.pl:

So how do you use this really cool feature in perl? Its as simple as adding "-T" switch to your shebang statement in your code or to the interpreter if your running from the command line.

#!/opt/local/bin/perl -TSo you ran out and did this on that brand new shiny piece of code you've been working on; then it burst into flames and came crashing down in a hail of environment or tainting errors, right? This is good it just means that you are indeed swimming in tainted waters and you need to plug the holes in your code.

or

[wm@perlbox ~]$ perl -T really_cool.pl

So your asking yourself, what does this person mean by "Tainted water"? Perl basically looks at certain aspects of your code, first do you use any functions that can compromise the system, next is any external data being used by those functions and also if the environment will allow arbitrary scripts to be executed by your code. So if any of the above condition exists in your code, then your data is considered tainted and perl will exit, complaining vigorously until you fix it. But if you would like a more precise definition of tainting, a good fishing spot is here, Perl Docs - perlsec. But for now lets consider this code snippet:

#!/opt/local/bin/perl -TFirst thing since we are using a backtick operation and executing code as if it were on the command line, we now have code that can compromise the system. Also we use a variable passed from an external source via the %ARGV hash that we have no control over. And lastly we are accessing a file in the script directory which could have access to other files with more sensitive data. So what happens when we run the code like this?

# real_cool.pl

my $value = $ARGV[0];

my $out = `cat error.log | mail -s 'error log' $value 2&>1`;

print $out;

IMPORTANT: Don't execute this code unless your sure the taint switch is in place and you understand what it's going to do if the taint switch is not in use!Luckily we were using the "-T" switch because this code would have deleted every thing your user has write access too or you would have killed the server if you were root. Also if this had been a cgi it could have deleted most of your website. This should underscore why using tainted data (aka water) can put some very serious security holes in your code.

[wm@perlbox ~]$ perl -T really_cool.pl '; rm -rf /'

Insecure dependency in `` while running with -T switch at real_cool.pl line 5.

So now we know there is a hole in our code big enough for any shark to swim through, what do we do next? Like any sailor knows you grab the biggest bucket you can and start baling out the water. What I mean by this is we need to capture the data and look at it before we use it and the taint feature lets us use sub-expressions from perl's regular expression features to do this.

sub un_taint{I know, your not familiar with regular expression and it makes your brain hurt, if this is the case I recommend you take some time and visit another favorite fishing holes of mine, Perl Docs - perlre. And for the others that are saying this functions does nothing, your partially right, but remember perl considers anything in the sub-expression to be not tainted and this is just our bucket.

my $tainted_data = shift;

$tainted_data =~ /(.*)/;

my $safer_data = $1;

return $safer_data;

}

We now have a bucket, so how do we start bailing out the water? We don't, we shove the bucket in the hole to plug it up. At this point in time we have an expression that matches anything ( /(.*)/ ) and need to be more specific about looking at our data and start validating what's in it. Lets take a look at the new and improved real_cool.pl:

#!/opt/local/bin/perl -TAs you can see we are now putting our tainted data in the bucket (aka un_taint()). We are also looking at the tainted data to see if it looks like a real email address ( /(\w{1}[\w-.]*)\@([\w-.]+)/ ) and if it does we return it to be used. The un_taint() function can also be used many times in our scripts by validating data with repeated elsif's. But there's a problem?

# real_cool.pl

my $value = un_taint($ARGV[0]);

my $out = `cat error.log | mail -s 'error log' $value 2&>1`;

print $out;

sub un_taint{

my $tainted_data = shift;

my $safer_data = undef;

if ($tainted_data =~ /(\w{1}[\w-.]*)\@([\w-.]+)/){

$safer_data = $1,'@',$2;

}

return $safer_data;

}

[wm@perlbox ~]$ perl -T really_cool.pl '; ls -l /etc/'If you remember at the beginning of my post I said, the taint feature will also look at %ENV hash to see if our script can view or execute any code on our system and is complaining about $ENV{PATH} specifically. All we need to is fix our environment a bit and we should be good to go.

Insecure $ENV{PATH} while running with -T switch at test.pl line 6.

#!/opt/local/bin/perl -TSo our script can no longer be flooded with tainted data and when it does it tells us about it. We are now using sub-expressions to validate our data before it is used by our system and making sure that our environment is safe from being exploited. These are basically the requirements that taint imposes on our code and our code has addressed them. As I mentioned earlier in this post these are just the basics of the taint features in perl and there is more detailed information about perl's these features and I recommend that you that some time to look at it. But for now this should be a good push it the right direction.

# real_cool.pl

$ENV{'PATH'} = '/usr/bin;/usr/loca/bin';

$ENV{'BASH_ENV'} = '';

my $value = un_taint($ARGV[0]);

my $out = `cat error.log | mail -s 'error log' $value 2&>1`;

print $out;

sub un_taint{

my $tainted_data = shift;

my $safer_data = undef;

if ($tainted_data =~ /(\w{1}[\w-.]*)\@([\w-.]+)/){

$safer_data = $1,'@',$2;

} else {

die('VIOLATION: "'.$tainted_data.'" is being passed as a parameter.');

}

return $safer_data;

}

Wednesday, January 13, 2010

Getting our toes wet?

Lets get this boat in the water, this is the first post of "Perl diving with the sharks...". I'm not what you would call a newbie to perl coding, I've been doing administrative scripts on various platforms and applications for about 18 years now. Also I'm not as familiar with using concepts like styles and best practices, but rather a kinda "If you want to put a nail in the mast, use a hammer..." approach to coding. But like all old fisherman I know a few tricks and also a bunch of the good places to go fishing.

This is not a structured course in perl, more like "catch of the day" coding problems. So I'm not sure of which direction these tidal currents will take us, but it should be an adventure for all. I will be talking about the tricks I've learned over time and some of the good references I've found to resolve my issues. And as I have said, I'm not an expert in all things perl, so for the perl gurus out there, please feel free to rebut and comment any thing that you feel might be constructive to the subject at hand. As a whole don't be shy about commenting, remember the only stupid question is the one you didn't ask and constructive criticism can always help.

Also my apologies for all the aquatic and fish references, but in light of the title of this blog, I think it is my duty to stick with this theme. This is my so to speak "Hook in cheek" style of bringing levity to what would other wise be just another boring technical piece of chum. So as I'm having fun writing about my adventures in perl, I hope you enjoy it and have fun with it also...

Subscribe to:

Posts (Atom)